Integrating Large Language Models for General-Purpose Robots

Introduction

Significance of AI in Robotics:

- AI advancements are driving the rise of general-purpose robots by enabling them to perform diverse tasks with greater efficiency and adaptability.

Examples of AI-Powered Robots:

- Autonomous Vehicles:
    - Example: Tesla’s self-driving cars
    - Application: Navigate roads, avoid obstacles, and respond to traffic signals autonomously.
    - AI Techniques: Deep Learning
    - Reference: Tesla Autopilot
- Service Robots in Hospitality:
    - Example: SoftBank Robotics’ Pepper
    - Application: Used in hotels and restaurants to greet guests, provide information, take orders, and even entertain.
    - AI Techniques: Speech Recognition, Emotion Detection
    - Reference: Pepper Robot
- Security Robots:
    - Example: Knightscope K5
    - Application: Patrol areas such as parking lots, corporate campuses, and malls to detect anomalies, provide surveillance, and deter crime.
    - AI Techniques: Anomaly Detection
    - Reference: Knightscope K5

Background

Three phases in autonomous robotic systems:

```mermaid
graph LR;
B[Perception] --> C[Planning]
C --> D[Control]
```

Perception

Planning

Control
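To make the perception, planning and control split concrete, here is a minimal Python sketch of one loop on a toy 1-D robot. Everything in it is an illustrative assumption (a perfect position sensor, a goal on a straight line, a 0.5 m step limit), and the names `perceive`, `plan`, `control` and `run` are hypothetical; they do not come from any particular robotics framework.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    position: float  # estimated position along a straight corridor (m)


def perceive(true_position: float) -> Observation:
    # Perception: turn raw sensor readings into a state estimate.
    # Assumption: the sensor is perfect, so the estimate equals the true position.
    return Observation(position=true_position)


def plan(obs: Observation, goal: float) -> float:
    # Planning: decide what is left to do (signed distance to the goal).
    return goal - obs.position


def control(remaining: float, max_step: float = 0.5) -> float:
    # Control: turn the plan into a bounded motion command.
    return max(-max_step, min(max_step, remaining))


def run(start: float, goal: float, steps: int = 20) -> list[float]:
    position = start
    trajectory = [position]
    for _ in range(steps):
        command = control(plan(perceive(position), goal))
        position += command  # assumption: the command is executed exactly
        trajectory.append(position)
        if abs(goal - position) < 1e-9:
            break
    return trajectory


print(run(0.0, 2.0))  # prints [0.0, 0.5, 1.0, 1.5, 2.0]
```

Each phase only consumes the previous phase's output, which is the property that later lets an LLM be slotted into one phase without rewriting the others.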

Motivation

Challenges with Traditional AI in Robotic Systems:

- Rule-based approaches, optimisation algorithms, and deep learning models:
    - struggle to cope with unpredictable real-world environments.
    - generalize poorly across diverse real-world tasks.

Leveraging Large Language Models: GPT, LLaMA, Gemini…

```mermaid
graph LR;
A[LLM]
A --> B[Pre-trained Foundation Models]
A --> C[Accept Natural Language Instructions]
A --> D[Process Multi-Modal Sensory Data]
B --> B1([large-scale training])
B --> B2([extensive datasets])
B1 --> B3([generalize knowledge])
B2 --> B3([generalize knowledge])
C --> C1([understand natural language commands])
C --> C2([respond in natural language])
C1 --> C4([user interaction])
C2 --> C4([user interaction])
D --> D1([interpret sensory data])
D --> D3([generate control signals with justification])
D1 --> D4([explainability])
D3 --> D4([explainability])
```
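The branch that combines natural language instructions with sensory data and returns control signals with a justification can be sketched as a structured-output contract between the robot and the model. This is a minimal Python illustration under stated assumptions: the action set `ALLOWED_ACTIONS`, the JSON reply format and the `fake_llm` stand-in are all hypothetical, and no specific LLM API is implied. The point is that the robot validates the reply before acting, so an unknown action is rejected rather than executed, while the justification is kept for explainability.

```python
import json
from dataclasses import dataclass

# Hypothetical action vocabulary the robot is willing to execute.
ALLOWED_ACTIONS = {"forward", "turn_left", "turn_right", "stop"}


@dataclass
class Decision:
    action: str
    justification: str


def build_prompt(instruction: str, sensors: dict[str, float]) -> str:
    # Combine the natural-language instruction with a text rendering of the sensor data.
    return (
        "You control a mobile robot. Reply only with JSON of the form "
        '{"action": ..., "justification": ...}.\n'
        f"Allowed actions: {sorted(ALLOWED_ACTIONS)}\n"
        f"Instruction: {instruction}\n"
        f"Sensor readings (m): {json.dumps(sensors, sort_keys=True)}"
    )


def parse_decision(reply: str) -> Decision:
    # Validate the model's reply before anything reaches the actuators.
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on malformed text
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    return Decision(action=action, justification=str(data.get("justification", "")))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same reply.
    return '{"action": "turn_left", "justification": "Obstacle 0.3 m ahead; the left side is clear."}'


prompt = build_prompt("Go to the door without hitting anything.",
                      {"front": 0.3, "left": 2.0, "right": 0.4})
decision = parse_decision(fake_llm(prompt))
print(decision.action, "-", decision.justification)
```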

Research Questions

- Integrating LLMs into robots (perception, planning, control):
    - How can LLMs be effectively integrated into general-purpose robotic systems to improve the interpretation of natural language instructions and multi-modal sensory data for enhanced task planning and action generation?
- Improving LLM performance (response generation with domain-specific knowledge):
    - What are the optimal strategies that allow LLMs to access and utilize domain-specific knowledge in real time to improve the performance and adaptability of general-purpose robots?
- Mitigating LLM hallucinations (error handling and mitigation):
    - How can we minimise the risk of inaccurate or false information generated by LLMs, such as mismatches between a robot’s actions and the LLM-generated explanations, to enhance transparency and trust in human-robot interaction?
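For the third question, one simple starting point is to check that an LLM's explanation actually refers to the action the robot executed. The Python sketch below is a deliberately crude keyword heuristic, not a proposed solution: the `ACTION_PHRASES` vocabulary and the `claimed_actions` and `check_consistency` helpers are hypothetical, and the check will miss paraphrases (for example "turned left") and misread negations.

```python
import re

# Hypothetical phrases an explanation might use for each executable action.
ACTION_PHRASES = {
    "forward": ["move forward", "go forward", "drive forward"],
    "turn_left": ["turn left"],
    "turn_right": ["turn right"],
    "stop": ["stop"],
}


def claimed_actions(explanation: str) -> set[str]:
    # Return every known action whose phrase appears as whole words in the explanation.
    text = explanation.lower()
    return {
        action
        for action, phrases in ACTION_PHRASES.items()
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases)
    }


def check_consistency(executed: str, explanation: str) -> tuple[bool, str]:
    # Flag explanations that omit the executed action or claim a different one.
    claimed = claimed_actions(explanation)
    if executed not in claimed:
        return False, f"explanation does not mention executed action {executed!r}"
    extra = claimed - {executed}
    if extra:
        return False, f"explanation also claims {sorted(extra)}"
    return True, "consistent"


print(check_consistency("turn_left", "I turn left because the path ahead is blocked."))
print(check_consistency("forward", "I turn left to avoid the obstacle."))
```

A flagged mismatch could, for instance, trigger a re-query of the model or a fallback to a safe action; which response is appropriate is exactly what the research question leaves open.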

Related Works

Before LLM Emergence

```mermaid
graph LR;
B[Perception] --> B1[Scene Understanding]
B1 --> B1a[Object Detection]
B1 --> B1b[Semantic Segmentation]
B1 --> B1c[Scene Reconstruction]
B --> B2[State Estimation and SLAM]
B2 --> B2a[Pose Estimation]
B2 --> B2b[Mapping with Sensors]
B --> B3[Learning-Based Methods]
B3 --> B3a[Supervised Techniques]
B3 --> B3c[Self-Supervised Techniques]
C[Planning] --> C1[Search-Based Planning]
C1 --> C1a[Heuristics for Pathfinding]
C1 --> C1b[Graphs for Pathfinding in Discrete Spaces]
C --> C2[Sampling-Based Planning]
C2 --> C2a[Random Sampling in Configuration Spaces]
C2 --> C2b[Path Connections]
C --> C3[Task Planning]
C3 --> C3a[Object-Level Abstractions]
C3 --> C3b[Symbolic Reasoning in Discrete Domains]
C --> C4[Reinforcement Learning]
C4 --> C4a[End-to-End Formulations]
D[Control] --> D1[PID Control Loops]
D1 --> D1a[Fundamental Method for Operational State Maintenance]
D --> D2["Model Predictive Control (MPC)"]
D2 --> D2a[Optimization-Based Action Sequence Generation]
D2 --> D2b[Application in Dynamic Environments]
D --> D3[Imitation Learning]
D3 --> D3a[Mimicking Expert Demonstrations]
D3 --> D3b[Application in Urban Driving]
D --> D4[Reinforcement Learning]
D4 --> D4a[Optimizes Control Policies through Accumulated Rewards]
D4 --> D4b[Direct Action Generation from Sensory Data]
```
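As a reference point for the classical control branch, the sketch below implements a textbook discrete PID controller in Python and runs it on a toy first-order plant: a wheel speed with linear drag, `dv/dt = u - 0.5 v`, integrated with explicit Euler steps. The plant model, gains and time step are illustrative assumptions. Comparing a PI controller with a P-only controller shows why the integral term is there: P-only control settles with a steady-state error, while the integral term drives that error to zero.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        # u = kp * e + ki * (integral of e) + kd * (de/dt), with e = setpoint - measurement
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative on the very first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 1.0, dt: float = 0.05,
             steps: int = 1000, drag: float = 0.5) -> float:
    # Toy plant (assumption): wheel speed v with linear drag, dv/dt = u - drag * v.
    v = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, v, dt)
        v += dt * (u - drag * v)  # explicit Euler step
    return v


print(round(simulate(PID(kp=2.0, ki=1.0, kd=0.0)), 3))  # PI: prints 1.0
print(round(simulate(PID(kp=2.0, ki=0.0, kd=0.0)), 3))  # P only: prints 0.8 (steady-state error)
```

With P-only control the plant settles where `kp * e = drag * v`, which for these numbers gives `v = 2 / 2.5 = 0.8`; the integral term keeps accumulating until that residual error is gone.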

LLM-powered Robotics

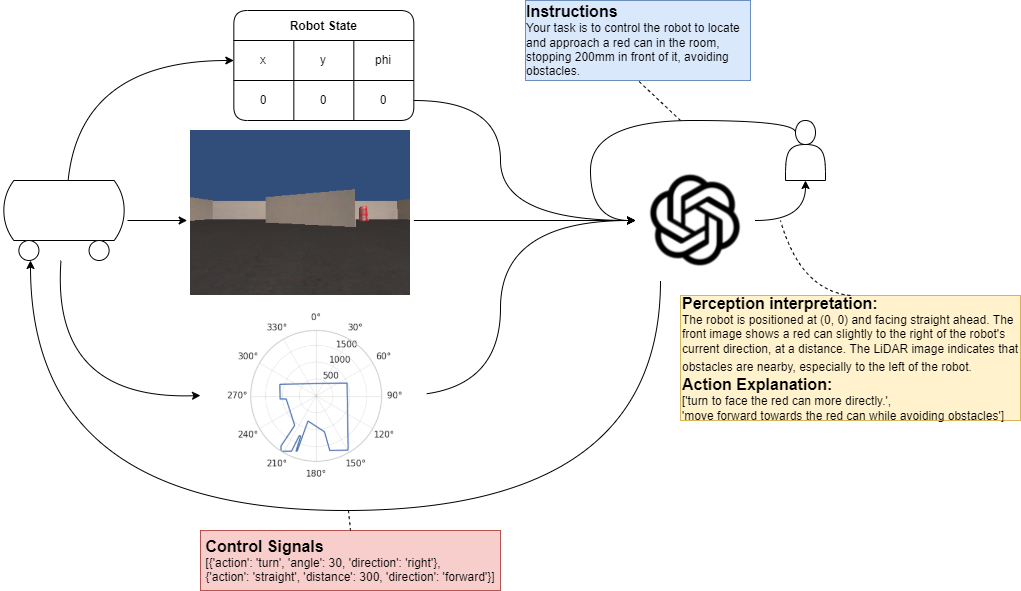

- Perception
    - Receive sensory data and interpret it in natural language.
- Planning
    - Generate task plans based on:
        - natural language instructions, and
        - perception results.
- Control
    - Generate actions based on the planning result.
    - Explain the actions taken in natural language.
    - Use these explanations as a reference for the next action generation.
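The perception, planning and control loop described above, including feeding each explanation back as context for the next decision, can be sketched as follows. This is a minimal Python illustration under stated assumptions: `toy_model` is a rule-based stand-in for an LLM (any function from prompt text to reply text fits the hypothetical `Model` type), and the `PLAN`/`ACT` prompt layout and the `action | explanation` reply format are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical: any function from prompt text to reply text can play the LLM's role.
Model = Callable[[str], str]


@dataclass
class Step:
    perception: str
    plan: str
    action: str
    explanation: str


def describe(sensors: dict[str, float]) -> str:
    # Perception: render sensor readings as natural language.
    return ", ".join(f"{name} distance {value:.1f} m" for name, value in sorted(sensors.items()))


def step(model: Model, instruction: str, sensors: dict[str, float], history: list[Step]) -> Step:
    perception = describe(sensors)
    previous = history[-1].explanation if history else "none"
    # Planning: instruction + perception + the previous explanation as context.
    plan = model(f"PLAN\nInstruction: {instruction}\n"
                 f"Perception: {perception}\nPrevious explanation: {previous}")
    # Control: ask for an action and its explanation, formatted as "action | explanation".
    action, _, explanation = model(f"ACT\nPlan: {plan}").partition("|")
    return Step(perception, plan, action.strip(), explanation.strip())


def run_episode(model: Model, instruction: str, sensor_log: list[dict[str, float]]) -> list[Step]:
    history: list[Step] = []
    for sensors in sensor_log:
        history.append(step(model, instruction, sensors, history))
    return history


def toy_model(prompt: str) -> str:
    # Rule-based stand-in so the loop runs without any external service.
    if prompt.startswith("PLAN"):
        if "front distance 0.3" in prompt:
            return "avoid obstacle"
        if "turning left" in prompt:  # reacts to the previous explanation
            return "realign with goal"
        return "head to goal"
    replies = {
        "avoid obstacle": "turn_left | front is blocked, turning left",
        "realign with goal": "turn_right | obstacle cleared, realigning toward the goal",
        "head to goal": "forward | path ahead is clear",
    }
    return replies[prompt.split("Plan: ", 1)[1]]


steps = run_episode(toy_model, "Reach the door.", [
    {"front": 0.3, "left": 2.0},
    {"front": 3.0, "left": 2.0},
    {"front": 3.0, "left": 2.0},
])
for s in steps:
    print(s.action, "-", s.explanation)
```

In this toy run the second and third sensor readings are identical, yet the decisions differ (`turn_right`, then `forward`): the only thing that changes between those two calls is the previous explanation passed into the planning prompt.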

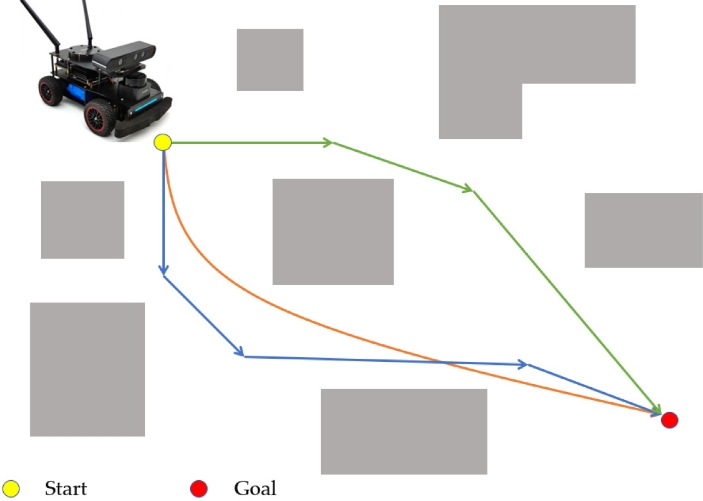

Current Work

LLMs for mobile robot navigation using Eyesim