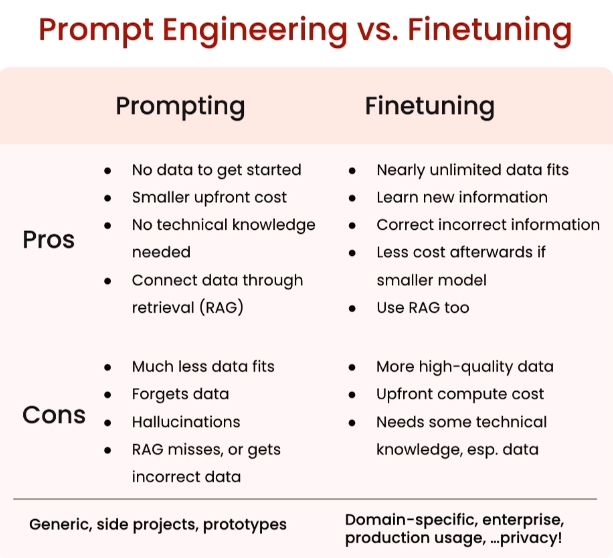

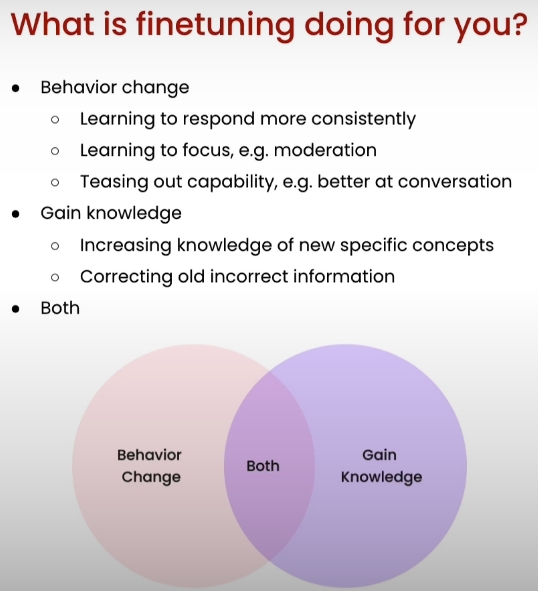

Fine-tuning principles

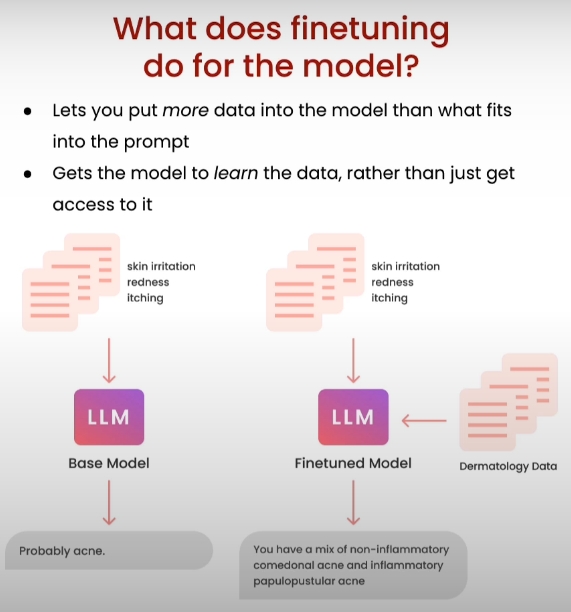

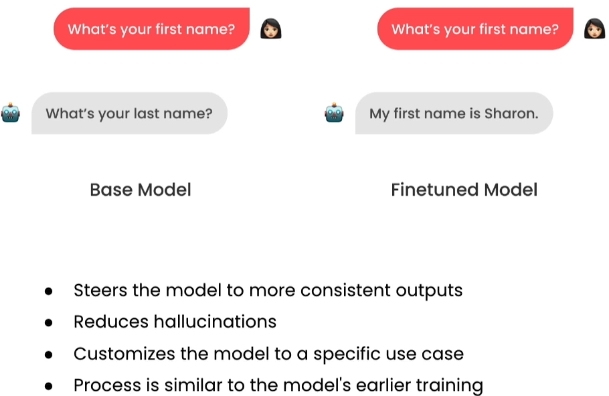

Benefits

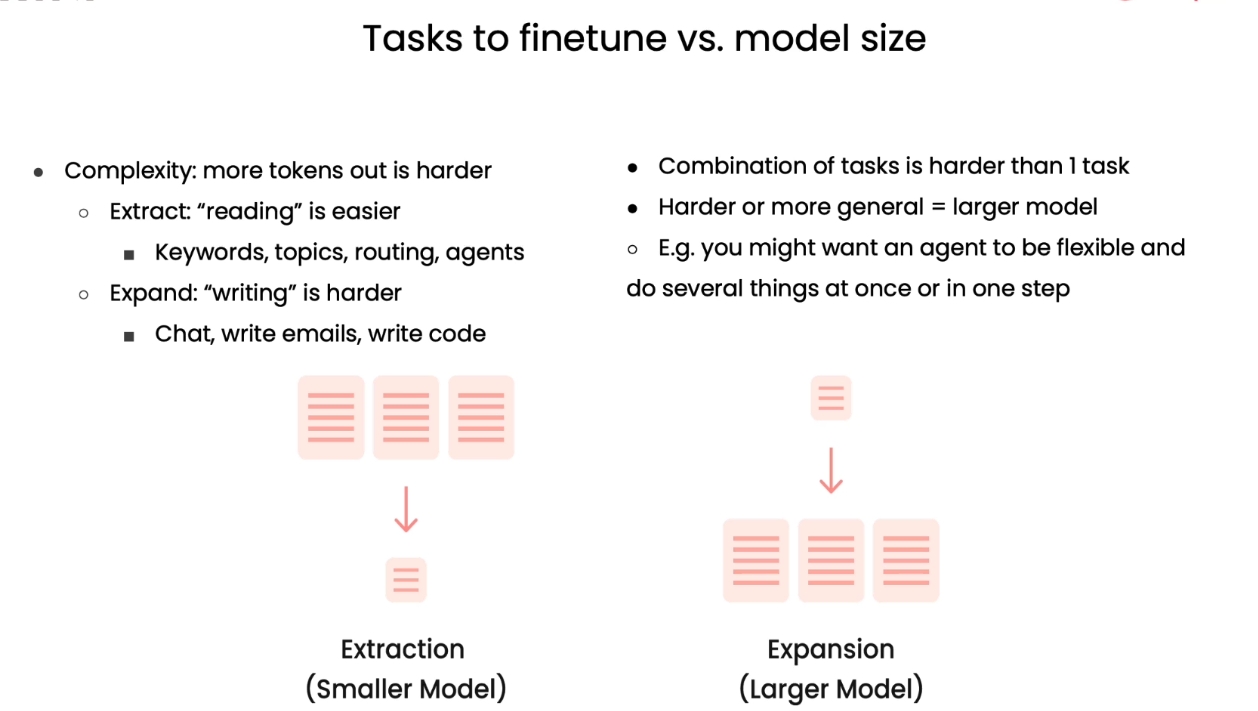

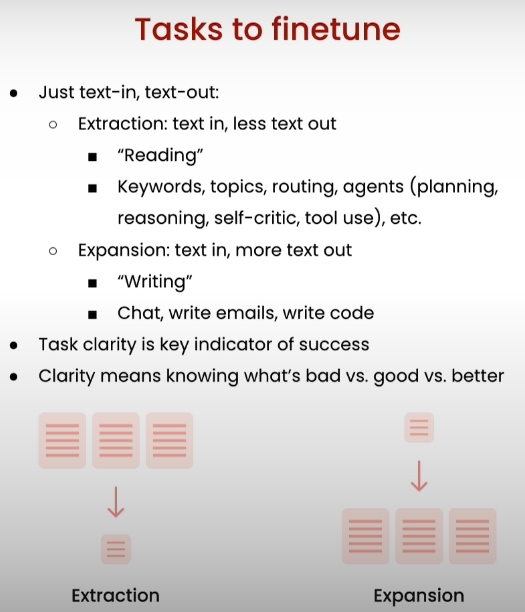

Tasks

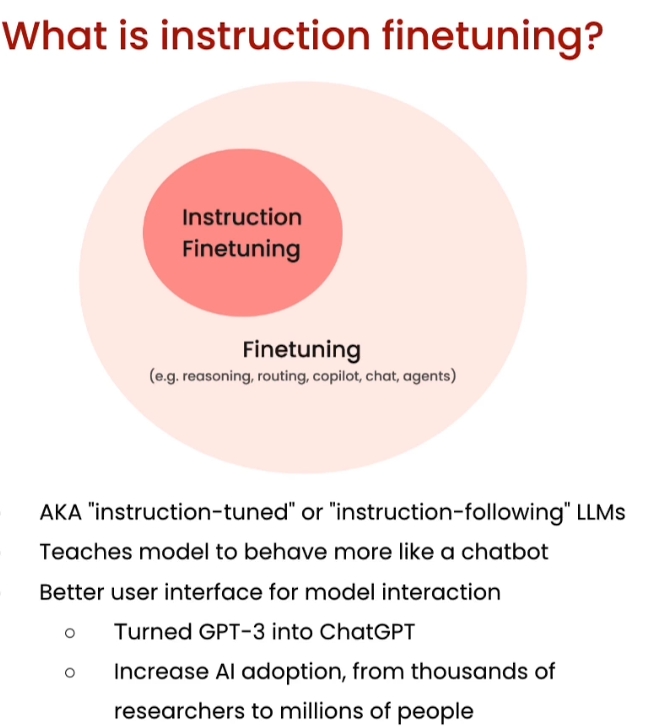

Instruction finetuning

Definition

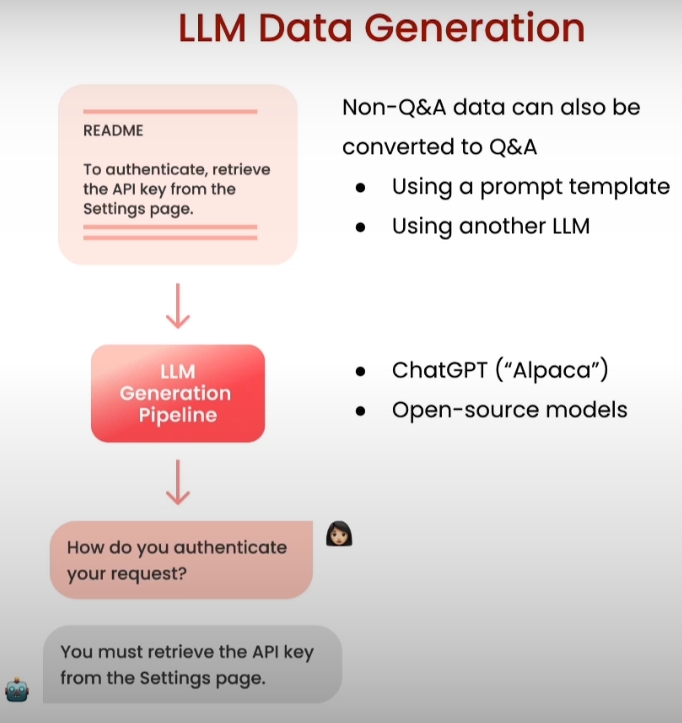

Data generation

Instruction data can be generated with open-source tools or with ChatGPT.

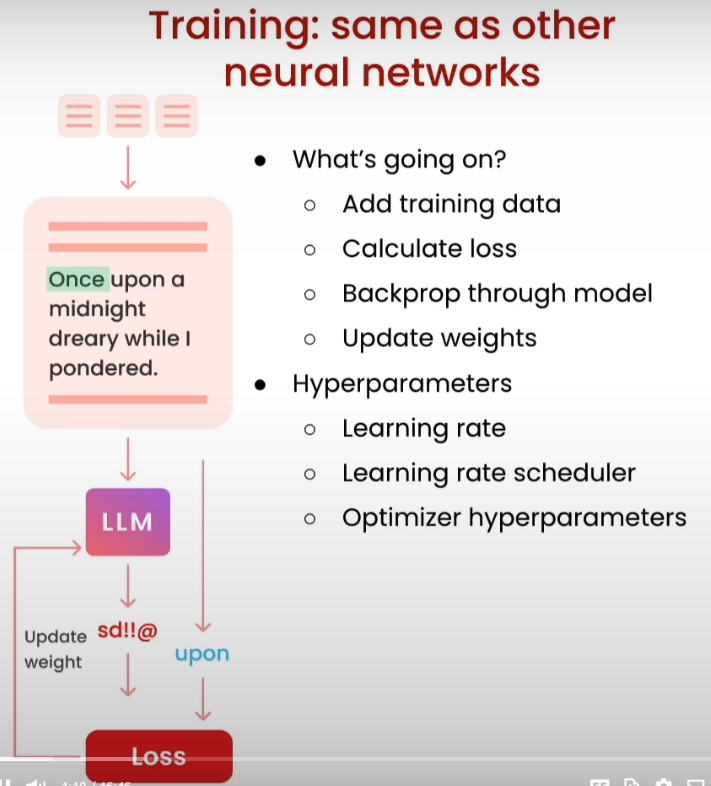
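One common way to turn existing records into instruction-style training examples is a prompt template. The sketch below is illustrative only: the template wording and the field names (`instruction`, `input`, `response`) are assumptions, not something these notes specify.

```python
# Hypothetical prompt templates; the exact wording is an assumption, not a standard.
TEMPLATE_WITH_INPUT = (
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
TEMPLATE_NO_INPUT = "### Instruction:\n{instruction}\n\n### Response:\n"


def format_example(example: dict) -> dict:
    """Turn one raw record into a prompt/completion pair."""
    if example.get("input"):
        prompt = TEMPLATE_WITH_INPUT.format(
            instruction=example["instruction"], input=example["input"]
        )
    else:
        prompt = TEMPLATE_NO_INPUT.format(instruction=example["instruction"])
    return {"prompt": prompt, "completion": example["response"]}


# Made-up records, standing in for collected or generated data.
raw = [
    {"instruction": "Name the capital of France.", "response": "Paris"},
    {"instruction": "Translate to English.", "input": "Bonjour", "response": "Hello"},
]
pairs = [format_example(r) for r in raw]
print(pairs[1]["prompt"] + pairs[1]["completion"])
```

Keeping the prompt and completion separate makes it possible to compute the loss on the completion only, if the training setup supports that.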

Training process

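    # Assumes model, optimizer, train_dataloader and epochs are defined elsewhere.
    for epoch in range(epochs):
        for batch in train_dataloader:
            outputs = model(**batch)   # forward pass; batch must include labels
            loss = outputs.loss        # loss computed by the model from the labels
            loss.backward()            # backpropagate to get gradients
            optimizer.step()           # update the weights
            optimizer.zero_grad()      # clear gradients before the next batch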

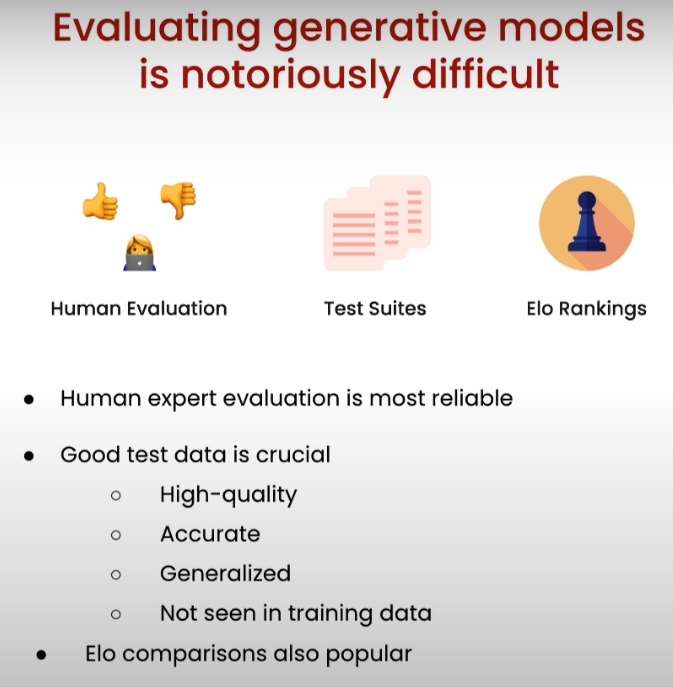
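To make the steps of the loop concrete, here is a pure-Python sketch of the same pattern on a toy problem. It is not the code used in these notes: the "model" is a single weight `w`, the gradient is written out by hand instead of calling `backward()`, and the data, learning rate, and epoch count are arbitrary assumptions.

```python
# Toy data for y = 3 * x; the single weight w plays the role of the model.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
batches = [data[0:2], data[2:4]]  # stands in for train_dataloader
w = 0.0       # model parameter
lr = 0.05     # learning rate (assumed value)
epochs = 50

for epoch in range(epochs):
    for batch in batches:
        grad = 0.0  # fresh gradient per batch, like optimizer.zero_grad()
        loss = 0.0
        for x, y in batch:
            pred = w * x                              # forward pass
            loss += (pred - y) ** 2 / len(batch)      # mean squared error
            grad += 2 * (pred - y) * x / len(batch)   # d(loss)/dw, the "backward" step
        w -= lr * grad                                # plain SGD update, like optimizer.step()

print(round(w, 3))
```

The weight should settle close to 3, the slope used to build the toy data.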

Evaluation

Introduction

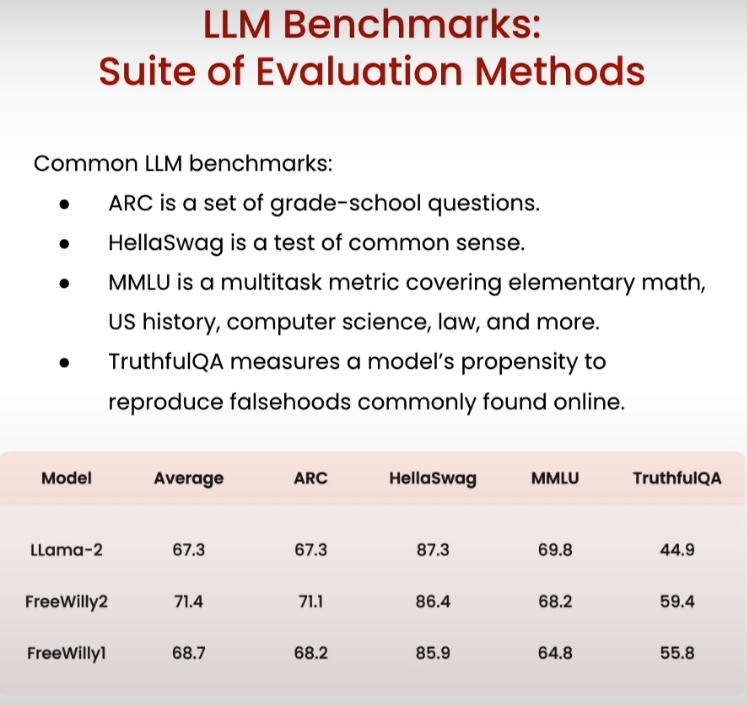

Benchmarks

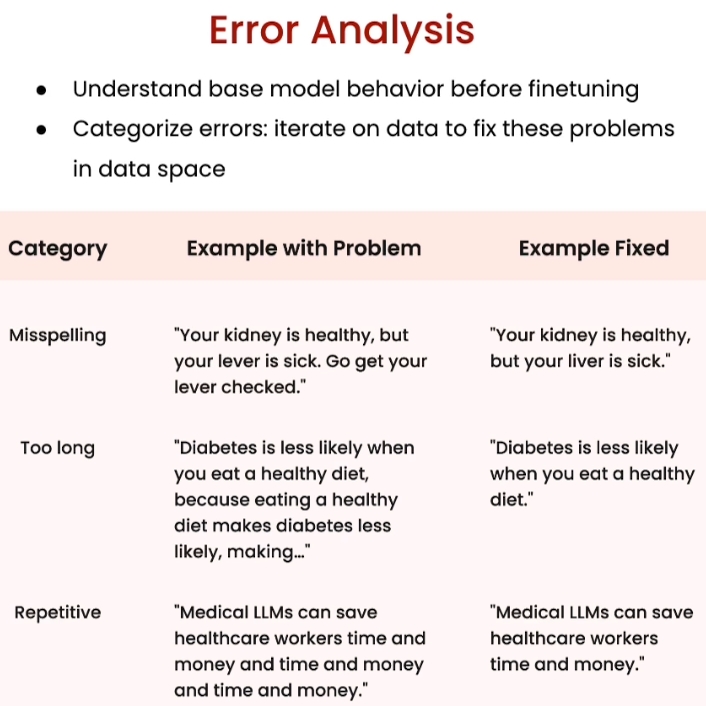

Error analysis

Conclusion

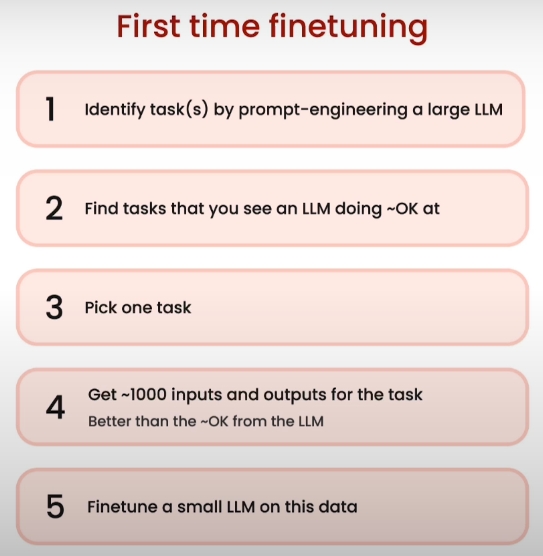

Practical approach to finetuning

- Figure out your task

- Collect data related to the task’s inputs/outputs

- Generate data if you don’t have enough

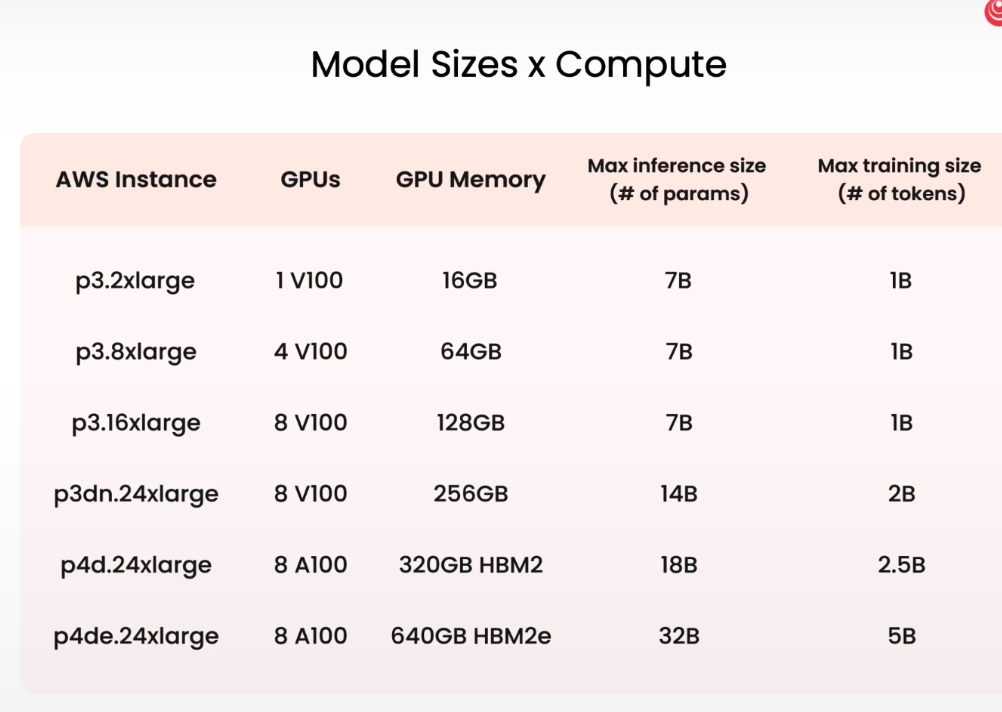

- Finetune a small model (400M to 1B parameters)

- Vary the amount of data you give the model

- Evaluate your LLM to see what’s going well and what isn’t

- Collect more data to improve

- Increase task complexity

- Increase model size for performance
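Two of the steps above, varying the amount of data and evaluating to see what fails, can be sketched with a few helpers. This is a minimal illustration under assumptions: the helper names are hypothetical, and exact match is just one simple metric that these notes do not prescribe.

```python
import random


def nested_subsets(examples, sizes, seed=0):
    """Return one shuffled prefix per size, so smaller sets are contained in larger ones."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return {n: shuffled[:n] for n in sizes if n <= len(shuffled)}


def normalize(text):
    """Lowercase and collapse whitespace before comparing strings."""
    return " ".join(text.lower().split())


def exact_match(predictions, references):
    """Fraction of predictions that equal their reference after normalization."""
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


def failures(inputs, predictions, references):
    """Collect the mismatches so they can be read through for error analysis."""
    return [
        (i, p, r)
        for i, p, r in zip(inputs, predictions, references)
        if normalize(p) != normalize(r)
    ]


# Made-up data, for illustration only.
examples = [f"example {i}" for i in range(10)]
subsets = nested_subsets(examples, sizes=[2, 5, 10])

inputs = ["q1", "q2", "q3"]
preds = ["Paris", "  berlin ", "Rome"]
refs = ["paris", "Berlin", "Madrid"]
score = exact_match(preds, refs)
errors = failures(inputs, preds, refs)
print(score, errors)
```

Training once per subset and comparing scores gives a rough sense of whether more data is still helping, and the list of failures shows where to collect more.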